Each only represents a small portion of the world and can do so in a peculiar way - capturing some features but ignoring many others. He argued that what distinguishes logical, iconic and distributed representations is what they can or cannot represent about the world. In his article “Representational Genera,” Haugeland noted that humans use many kinds of representations, like the pictures we frame and hang around the house or the descriptions that fill books. The Distinction Between Logical And Iconic It treats common sense not as a matter of knowing things about the world, but a matter of doing things in the world. Haugeland opened up yet another approach to common sense - which he did not take - centered around neural networks , which are a kind of “distributed representation.” This way of representing the world is less familiar than logical and iconic, but arguably the most common. Another approach would be to base common sense around mental images, like a massive model of the world our brains can consult. Many observers seem to imagine common sense as a matter of words, such as a bunch of sentences in the head cataloging the beliefs a person holds. In many criticisms of AI, one that CLIP and DALL-E expose, the meaning of common sense is ambiguous. Although the results - as with all contemporary AI - have their mix of jaw-dropping successes and embarrassing failures, their abilities reveal some insight into how representations inform us about the world. Both are multimodal neural networks, artificial intelligence systems that discover statistical regularities in massive amounts of data from two different ways of accessing the same situation, such as vision and hearing.ĬLIP and DALL-E are fed words and images and must discern correspondences between specific words and objects, phrases and events, names and places or people, and so on. 
CLIP can provide descriptions of what is in an image DALL-E functions as a computational imagination, conjuring up objects or scenes from descriptions.

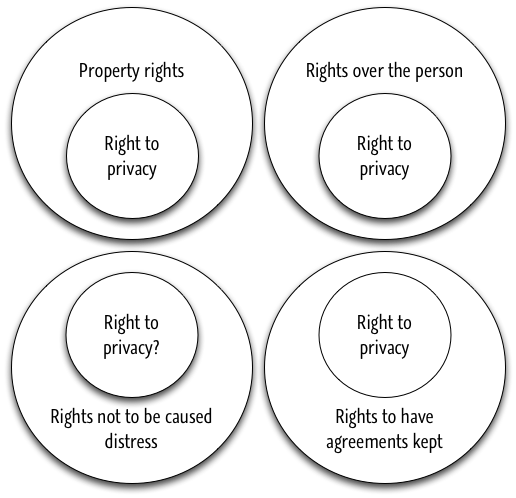

Which is why OpenAI’s recently released neural networks, CLIP and DALL-E, are such a surprise. Understanding representations, Haugeland wrote, depends on “general background familiarity with the represented contents - that is, on worldly experience and skill.” It is our familiarity with representations, like the “logical representations” of words and the “iconic representations” of images, that allow us to ignore scribbles on paper or sounds and instead grasp what they are about - what they are representing in the world. In a seminal paper, “ Representational Genera,” the late philosopher of AI John Haugeland argued that a unique feature of human understanding, one machines lack, is an ability to describe a picture or imagine a scene from a description. Central to many of these criticisms is the idea that machines don’t have “common sense,” such as an artificial intelligence system recommending you add “hard cooked apple mayonnaise” or “heavy water” to a cookie recipe.

Jacob Browning is a postdoc in NYU’s Department of Computer Science working on the philosophy of AI.įor as long as people have fantasized about thinking machines, there have been critics assuring us of what machines can’t do.
